The Exponential Power Surge: How AI's Insatiable Appetite for Computing Is Reshaping Global Infrastructure

- Mac Bird

- Jul 14, 2025

The Staggering Scale of Growth

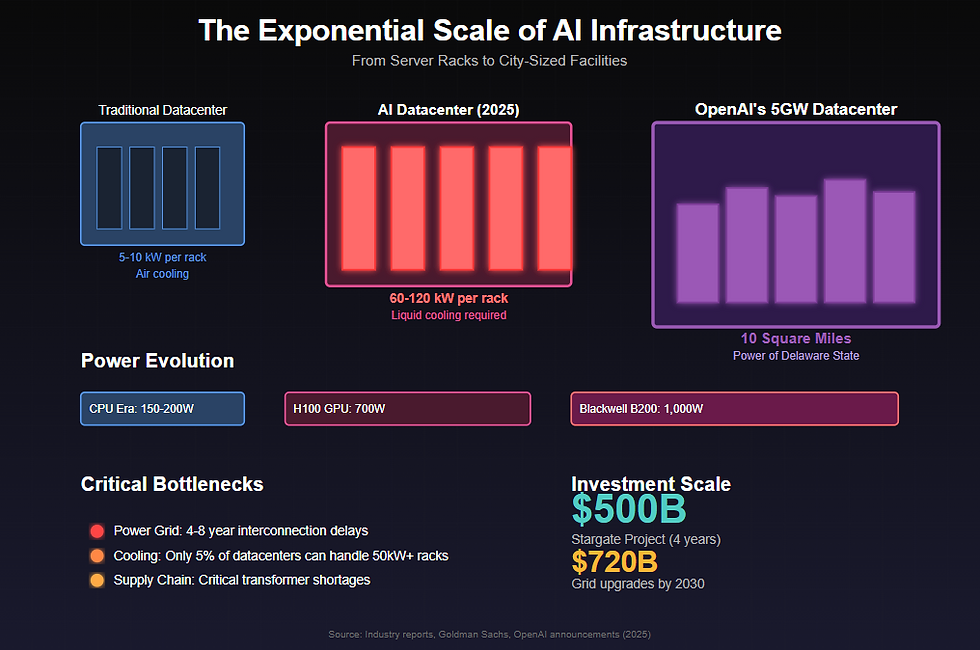

The numbers emerging in mid-2025 are almost incomprehensible. Goldman Sachs Research estimates that there will be around 122 GW of data center capacity online by the end of 2030, representing a 165% increase from current levels [1]. AI data centers could need 68 GW in total by 2027 — almost a doubling of global data center power requirements from 2022 and close to California's 2022 total power capacity of 86 GW [2].
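As a quick sanity check on these figures, the sketch below converts the quoted percentage increase into a multiplier and expresses the 68 GW projection as a share of California's 86 GW. The function names are illustrative, and the inputs are simply the numbers quoted above.

```python
def increase_to_multiplier(percent_increase: float) -> float:
    """Convert a percentage increase into a growth multiplier (165% -> 2.65x)."""
    return 1.0 + percent_increase / 100.0


def share(part: float, whole: float) -> float:
    """Return part as a fraction of whole."""
    return part / whole


if __name__ == "__main__":
    # Inputs are the figures quoted in the article, not independent data.
    print(f"A 165% increase is a {increase_to_multiplier(165):.2f}x multiplier")
    print(f"68 GW is {share(68, 86):.0%} of California's 86 GW")
```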

This isn't just incremental growth; it's exponential. Total AI computing capacity, measured in floating-point operations per second (FLOPS), has been growing 50% to 60% quarter on quarter globally since the first quarter of 2023 [3]. The transformation is being driven by a fundamental shift in computing requirements: Nvidia's H100 GPUs have a thermal design power (TDP) of 700 W, the B200 chip's TDP reaches 1,000 W, and NVIDIA Blackwell configurations are anticipated to require between 60 kW and 120 kW per rack, a massive leap from traditional data center designs [4,5].
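To see what quarter-on-quarter growth of that size means over a year, the rate can be compounded across four quarters. The snippet below is a minimal sketch of that arithmetic; the function name is an illustrative choice, and it assumes the quarterly rate stays constant.

```python
def annualized_growth(quarterly_rate: float, quarters: int = 4) -> float:
    """Compound a constant quarterly growth rate into a multi-quarter multiplier."""
    return (1.0 + quarterly_rate) ** quarters


if __name__ == "__main__":
    for rate in (0.50, 0.60):
        print(f"{rate:.0%} per quarter -> {annualized_growth(rate):.2f}x per year")
```

Under that constant-rate assumption, 50% to 60% per quarter compounds to roughly a five- to six-and-a-half-fold increase per year.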

The Square Mile Ambition: OpenAI's Vision Meets Reality

Perhaps nothing illustrates the audacious scale of AI infrastructure ambitions better than OpenAI's current mega-projects. The company isn't thinking in terms of buildings or even campuses — it's thinking in terms of small cities.

OpenAI is poised to help develop a staggering 5-gigawatt data center campus in Abu Dhabi, positioning the company as a primary anchor tenant in what could become one of the world's largest AI infrastructure projects. The facility would reportedly span an astonishing 10 square miles and consume power equivalent to five nuclear reactors [6].

Five gigawatts is an astonishingly large amount of power. To put this in perspective, a single 5GW datacenter would consume roughly the same amount of power as the entire state of Delaware uses annually. Constellation Energy CEO Joe Dominguez told Bloomberg in late 2024 that he had heard Altman wanted five to seven such data centers [7].
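Power (gigawatts) and annual energy (terawatt-hours) are easy to conflate, so it helps to convert one into the other. The sketch below assumes a facility drawing its full rated load around the clock, which is an upper bound; the `utilization` parameter is a hypothetical knob for lower average loads.

```python
HOURS_PER_YEAR = 8_760  # 365 days x 24 hours


def annual_energy_twh(power_gw: float, utilization: float = 1.0) -> float:
    """Energy used in a year (TWh) by a load of power_gw at a given average utilization."""
    return power_gw * HOURS_PER_YEAR * utilization / 1_000.0


if __name__ == "__main__":
    print(f"5 GW at full load: {annual_energy_twh(5):.1f} TWh per year")
    print(f"Seven such sites:  {annual_energy_twh(5 * 7):.1f} TWh per year")
```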

The Stargate project represents OpenAI's domestic ambitions. In OpenAI's words: "The Stargate Project is a new company which intends to invest $500 billion over the next four years building new AI infrastructure for OpenAI in the United States. We will begin deploying $100 billion immediately" [8]. In early February 2025, OpenAI executives said the company had sent out a request for proposals (RFP) to states less than a week earlier and was actively considering 16 states: Arizona, California, Florida, Louisiana, Maryland, Nevada, New York, Ohio, Oregon, Pennsylvania, Utah, Texas, Virginia, Washington, Wisconsin and West Virginia [9].

The Bottlenecks: When Ambition Meets Physics

1. Power Grid Constraints

The most immediate and severe bottleneck is electrical power. In Georgia, demand for industrial power is surging to record highs, with the projection of new electricity use for the next decade now 17 times what it was only recently [10]. The infrastructure challenges are mounting:

Transmission Delays: All six grid operators have reported they cannot meet the deadline for upgrading transmission systems by July 2025. The California Independent System Operator (CAISO) told FERC it may require until late 2027 to comply fully [11]

Multi-Year Backlogs: Advanced economies such as the U.S. and parts of Europe are seeing 4- to 8-year interconnection backlogs for new grid tie-ins [12]

Infrastructure Age: Much of the infrastructure supporting electricity transmission in the US and Europe was built in the 1970s and 1980s [13]

2. Cooling Challenges

As power density skyrockets, traditional cooling methods are reaching their limits:

The TDP of the GB200 NVL36 and NVL72 complete rack systems is projected to reach 70kW and nearly 140kW, respectively, necessitating advanced liquid cooling solutions for effective heat management [14]

Blackwell configurations will require 60 kW to 120 kW per rack, but fewer than 5% of the world's data centers can support even 50 kW per rack [15]

22% of data centers already have liquid cooling systems in place, with that figure expected to grow rapidly [16]
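The rack figures above can be related back to per-chip TDP with some simple arithmetic. The sketch below assumes the GPU counts implied by the product names (36 and 72), uses the 1,000 W B200 TDP quoted earlier, and treats "nearly 140kW" as 140 kW; the remainder of each rack's budget goes to CPUs, networking and other components that are not modelled here.

```python
GPU_TDP_W = 1_000          # B200 TDP as quoted in this article
SUPPORTED_RACK_KW = 50     # density that fewer than 5% of data centers can support

# Rack TDPs as quoted above; GPU counts are assumed from the product names.
RACKS = {
    "GB200 NVL36": {"gpus": 36, "rack_tdp_kw": 70},
    "GB200 NVL72": {"gpus": 72, "rack_tdp_kw": 140},
}


def gpu_power_kw(gpus: int, tdp_w: float = GPU_TDP_W) -> float:
    """GPU-only power for a rack, in kW."""
    return gpus * tdp_w / 1_000.0


def fits(rack_kw: float, supported_kw: float = SUPPORTED_RACK_KW) -> bool:
    """True if a rack of rack_kw can be hosted at the supported density."""
    return rack_kw <= supported_kw


if __name__ == "__main__":
    for name, spec in RACKS.items():
        gpu_kw = gpu_power_kw(spec["gpus"])
        print(f"{name}: {gpu_kw:.0f} kW of GPUs in a {spec['rack_tdp_kw']} kW rack, "
              f"fits a 50 kW facility: {fits(spec['rack_tdp_kw'])}")
```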

3. Supply Chain Constraints

The rapid scaling has exposed critical supply chain vulnerabilities:

Chip Production: In 2025, GB200 NVL36 shipments will reach 60,000 racks, with Blackwell GPU usage between 2.1 and 2.2 million units [17]

Equipment Shortages: Severe supply chain bottlenecks for essential hardware—including large-format transformers, gas turbines, and switchgear—are further eroding lead time certainty [18]

Labor Scarcity: In Abilene, Stargate construction has contributed to a local shortage of electricians for commercial jobs [19]
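The chip production figures above are consistent with each other, as a short check shows. The sketch assumes 36 GPUs per NVL36 rack, which is what the product name implies; the function name is illustrative.

```python
def gpus_for_racks(racks: int, gpus_per_rack: int) -> int:
    """Total GPUs needed for a given number of identical racks."""
    return racks * gpus_per_rack


if __name__ == "__main__":
    total = gpus_for_racks(60_000, 36)
    print(f"60,000 NVL36 racks -> {total:,} GPUs")
    print("Within the quoted 2.1-2.2 million range:", 2_100_000 <= total <= 2_200_000)
```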

4. Geographic Concentration

Nearly half of all U.S. data center capacity is clustered in just five regions, intensifying local stress on transmission and distribution infrastructure. This concentration creates severe local bottlenecks even as other regions have available capacity [20].

China's Parallel Universe: Different Challenges, Similar Scale

China's AI infrastructure ambitions face a unique set of constraints shaped by geopolitics and central planning.

Computing Power Comparison

By June 2024, China had 246 EFLOP/s of total compute capacity—including both public and commercial data centers—and aims to reach 300 EFLOP/s by 2025 [21]. For comparison, the US accounted for 32% of the world's total computing power of 910 EFLOPS as of the end of last year, while China held 26% [22]. This means the US has approximately 291 EFLOPS compared to China's 237 EFLOPS, maintaining a significant but narrowing lead.
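The 291 and 237 EFLOPS figures follow directly from the quoted shares of the 910 EFLOPS world total. A minimal sketch of that calculation, with illustrative names:

```python
WORLD_TOTAL_EFLOPS = 910  # world total as quoted above


def capacity_from_share(share: float, total: float = WORLD_TOTAL_EFLOPS) -> float:
    """Absolute compute capacity implied by a share of the world total."""
    return share * total


if __name__ == "__main__":
    us = capacity_from_share(0.32)
    china = capacity_from_share(0.26)
    print(f"US:    {us:.1f} EFLOPS")
    print(f"China: {china:.1f} EFLOPS")
    print(f"Gap:   {us - china:.1f} EFLOPS")
```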

The Build-Out Boom and Bust

China's approach has been characteristically ambitious but increasingly challenged. In 2024 alone, over 144 companies registered with the Cyberspace Administration of China—the country's central internet regulator—to develop their own LLMs. Yet according to the Economic Observer, a Chinese publication, only about 10% of those companies were still actively investing in large-scale model training by the end of the year [23].

The infrastructure buildout tells a similar story:

In 2023 alone, more than 500 data center projects were proposed nationwide [24]

By the end of 2024, the excitement that once surrounded China's data center boom was curdling into disappointment [25]

Facilities that cost billions of dollars now sit underused, returns are falling, and the market for GPU rentals has collapsed [26]

The Sanctions Squeeze

U.S. export controls continue to create critical bottlenecks for China's AI ambitions:

Project approval documents show that in the fourth quarter of 2024, local governments in Xinjiang and in neighboring Qinghai province green-lit a total of 39 data centers that intend to use more than 115,000 Nvidia processors — all banned from export to China [27]

Some Chinese organizations were found to be buying or trying to buy access to export-controlled chips such as Nvidia's high-end GPUs operating from datacenters in the US, neatly side-stepping the restrictions [28]

The efficiency breakthrough of DeepSeek demonstrates adaptation: Chinese LLM player DeepSeek reported that its V3 model achieved substantial improvements in training and reasoning efficiency, notably reducing training costs by approximately 18 times and inferencing costs by about 36 times, compared with GPT-4o [29]
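A reduction "by N times" can also be read as a percentage saving, which makes the DeepSeek figures easier to compare with other cost claims. The sketch below assumes "reduced by approximately 18 times" means the new cost is 1/18 of the baseline; the function name is illustrative.

```python
def percent_saving(reduction_factor: float) -> float:
    """Percentage saved when cost falls to 1/reduction_factor of the baseline."""
    return (1.0 - 1.0 / reduction_factor) * 100.0


if __name__ == "__main__":
    print(f"Training (18x cheaper):  {percent_saving(18):.1f}% saving")
    print(f"Inference (36x cheaper): {percent_saving(36):.1f}% saving")
```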

The Path Forward: Innovation Under Pressure

The collision between exponential demand and physical constraints is forcing unprecedented innovation:

Behind-the-Meter Power

62% of data centers are exploring on-site power generation to boost energy efficiency or resilience. Nearly one-fifth (19%) of those surveyed said they were already implementing some form of behind-the-meter power by the end of 2024 [30]. Solutions include:

Small modular reactors (SMRs) with deployment expected by 2030 [31]

Direct connections to existing nuclear plants [32]

Massive renewable installations with battery storage

Distributed Training

Google, OpenAI, and Anthropic are already executing plans to expand their large model training from one site to multiple datacenter campuses. Google's infrastructure demonstrates the scale: Google has 2 primary multi-datacenter regions, in Ohio and in Iowa/Nebraska with plans for gigawatt-scale clusters [33].

Efficiency Breakthroughs

The latest generation of chips promises dramatic efficiency gains. Blackwell will support double the compute and model sizes with new 4-bit floating point AI inference capabilities, and it provides 3 to 5 times more AI throughput in a power-limited data center than Hopper [34].

The Trillion-Dollar Infrastructure Race

The scale of investment required continues to escalate. For a sense of magnitude, $500 billion in labor costs is roughly equivalent to 12 billion labor hours (six million people working full time for an entire year) [35]. Goldman Sachs Research estimates that about $720 billion of grid spending through 2030 may be needed just for electrical infrastructure upgrades [36].
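The labor equivalence can be unpacked into the working year and hourly cost it implies. The sketch below uses only the three quoted numbers; the derived hourly rate is implied by them and is not a figure from the source.

```python
LABOR_COST_USD = 500e9   # $500 billion
LABOR_HOURS = 12e9       # 12 billion labor hours
WORKERS = 6e6            # six million people


def hours_per_worker(total_hours: float, workers: float) -> float:
    """Hours each worker contributes if the total is spread evenly."""
    return total_hours / workers


def implied_hourly_cost(total_cost: float, total_hours: float) -> float:
    """Average cost per labor hour implied by the totals."""
    return total_cost / total_hours


if __name__ == "__main__":
    print(f"Hours per worker per year: {hours_per_worker(LABOR_HOURS, WORKERS):,.0f}")
    print(f"Implied cost per hour: ${implied_hourly_cost(LABOR_COST_USD, LABOR_HOURS):.2f}")
```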

Major tech companies are responding with massive commitments:

Alibaba Group announced plans to invest over $50 billion in cloud computing and AI hardware infrastructure over the next three years, while ByteDance plans to invest around $20 billion in GPUs and data centers [37]

Deloitte's State of Generative AI in the Enterprise survey noted that enterprises have been mostly piloting and experimenting until now, suggesting enterprise spending is about to accelerate dramatically [38]

The New Reality of 2025

As we reach the midpoint of 2025, several trends have crystallized:

Liquid Cooling Becomes Mandatory

TrendForce's report on AI servers projected that NVIDIA would launch its next-generation Blackwell platform by the end of 2024, and that major CSPs would start building AI server data centers based on the new platform, potentially driving the penetration rate of liquid cooling solutions to 10% [39]. With rack densities reaching 120kW, air cooling is simply no longer viable for cutting-edge AI infrastructure.

The Nuclear Renaissance

The power demands are driving a fundamental shift in energy sourcing. Multiple nuclear PPAs were signed in 2024 involving active nuclear plants as well as decommissioned plants which will be reactivated around 2028 [40]. Amazon Web Services bought a data center in Pennsylvania that is co-located with the Susquehanna nuclear power station and Microsoft struck a deal with Constellation that will see the restart of a Three Mile Island reactor, providing 835 megawatts of carbon-free energy [41].
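To relate the 835 MW restart to the 5-gigawatt campuses discussed earlier, one can count how many units of that size a single campus would need. This is a rough sketch that assumes each unit delivers its full 835 MW continuously and ignores transmission losses and reserve margins.

```python
import math


def units_needed(demand_mw: float, unit_mw: float) -> int:
    """Smallest whole number of generating units that covers the demand."""
    return math.ceil(demand_mw / unit_mw)


if __name__ == "__main__":
    demand_mw = 5_000  # one 5 GW campus
    unit_mw = 835      # output quoted for the Three Mile Island restart
    print(f"One unit covers {unit_mw / demand_mw:.1%} of a 5 GW campus")
    print(f"Units needed: {units_needed(demand_mw, unit_mw)}")
```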

Geographic Diversification Accelerates

With primary markets constrained, some companies that were considering Northern Virginia for their requirements are now opting for alternative locations such as Atlanta or Columbus. Similarly, requirements in California are shifting towards cities like Las Vegas, Salt Lake City, or Denver [42].

Conclusion: The Physical Manifestation of Intelligence

We're witnessing nothing less than the physical manifestation of the AI revolution — a transformation that requires us to think about computing infrastructure not in terms of server rooms or even buildings, but in terms of facilities consuming the power of entire states.

The bottlenecks are real and severe. Power grids designed for a different era strain under loads they were never meant to handle. Cooling systems push against the laws of thermodynamics. Supply chains struggle to deliver components at the scale and speed demanded. "Whatever we're talking about is not only something that's never been done, but I don't believe it's feasible as an engineer," said Constellation Energy's CEO about OpenAI's 5GW proposals [43].

Yet the momentum appears unstoppable. Despite the setbacks, China's central authorities remain committed to AI development, and this sentiment echoes globally. The stakes are simply too high, and the potential too transformative, to turn back now.

As we progress through 2025, the race isn't just about who can build the biggest datacenter or deploy the most GPUs. It's about who can solve the interconnected challenges of power, cooling, supply chains, and geographic distribution to create a sustainable foundation for the intelligence explosion. In this new great game, infrastructure truly is destiny.

References

[1] Goldman Sachs Research, "AI to drive 165% increase in data center power demand by 2030," February 4, 2025

[2] RAND Corporation, "AI's Power Requirements Under Exponential Growth," RR-A3572-1, 2025

[3] Deloitte, "As generative AI asks for more power, data centers seek more reliable, cleaner energy solutions," November 18, 2024

[4] The Register, "Nvidia turns up the AI heat with 1,200W Blackwell GPUs," April 1, 2025

[5] AMAX, "Top 5 Considerations for Deploying NVIDIA Blackwell," July 22, 2024

[6] TechCrunch, "OpenAI's planned data center in Abu Dhabi would be bigger than Monaco," May 17, 2025

[7] Fortune, "OpenAI reportedly wants to build 5-gigawatt data centers," October 1, 2024

[8] OpenAI, "Announcing The Stargate Project," accessed July 2025

[9] CNBC, "OpenAI considering 16 states for data center campuses as part of Trump's Stargate project," February 6, 2025

[10] The Washington Post, "Amid explosive demand, America is running out of power," October 11, 2024

[11] DCD, "US data centers face grid bottlenecks as regional operators delay upgrades," January 2025

[12] Data Center Frontier, "New IEA Report Contrasts Energy Bottlenecks with Opportunities for AI and Data Center Growth," accessed July 2025

[13] Uptime Institute Blog, "Data center operators will face more grid disturbances," June 28, 2023

[14] TrendForce, "NVIDIA Blackwell's High Power Consumption Drives Cooling Demands," accessed July 2025

[15] Navitas, "Nvidia's Grace Hopper Runs at 700 W, Blackwell Will Be 1 KW," November 28, 2024

[16] Utility Dive, "The 2025 outlook for data center cooling," January 22, 2025

[17] TrendForce, "GB200 shipments expected to reach 60,000 units in 2025," accessed July 2025

[18] Data Center Frontier, "The Power Problem: Transmission Issues Slow Data Center Growth," accessed July 2025

[19] The Washington Post, "OpenAI's $100B Stargate AI project seeks states to host giant data centers," May 10, 2025

[20] FTI Consulting, "Current Power Trends Implications Data Center Industry," April 2, 2025

[21] RAND Corporation, "Full Stack: China's Evolving Industrial Policy for AI," accessed July 2025

[22] South China Morning Post, "China beefs up computing power by 25% as AI race drives demand," January 13, 2025

[23] MIT Technology Review, "China built hundreds of AI data centers to catch the AI boom. Now many stand unused," March 26, 2025

[24] Tom's Hardware, "China's AI data center boom goes bust," March 28, 2025

[25] MIT Technology Review, March 26, 2025

[26] Tom's Hardware, March 28, 2025

[27] Bloomberg, "China Wants to Use 115,000 Banned Nvidia Chips to Fulfil Its AI Ambitions," accessed July 2025

[28] The Register, "China AI devs use cloud services to game US chip sanctions," August 23, 2024

[29] McKinsey, "The cost of compute power: A $7 trillion race," April 28, 2025

[30] Data Center Knowledge, "Data Centers Bypassing the Grid to Obtain the Power They Need," May 1, 2025

[31] JLL, "2025 Global Data Center Outlook," April 21, 2025

[32] Reuters, referenced in Brightlio, "215 Data Center Stats," June 2025

[33] SemiAnalysis, "Multi-Datacenter Training: OpenAI's Ambitious Plan To Beat Google's Infrastructure," May 13, 2025

[34] NVIDIA, "NVIDIA Blackwell Platform Arrives to Power a New Era of Computing," accessed July 2025

[35] McKinsey, April 28, 2025

[36] Goldman Sachs Research, February 4, 2025

[37] MIT Technology Review, March 26, 2025

[38] Deloitte, November 18, 2024

[39] TrendForce, accessed July 2025

[40] JLL, April 21, 2025

[41] Data Center Frontier, "The Power Problem," accessed July 2025

[42] Data Center Frontier, "The Power Problem," accessed July 2025

[43] Fortune, October 1, 2024
